Why You Should Implement Scheduled Reporting

Investing time in automation can deliver better results with less effort over time. Gartner has suggested that any manual task done more than four times a year should be automated. That may be on the extreme end, because automation has pitfalls of its own. The image below shows how people can spend so much time optimising and reviewing an automation that it never actually saves them any time.

Although the scenario in that image is real and occurs frequently, it shouldn’t scare you off automation. Here at Opmantek, we believe we have the tools to make network automation easy for you. There are too many approaches to cover in one post, so this one looks at a single feature and how it can make your life easier.
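To put rough numbers on that trade-off, here is a minimal break-even sketch in Python. The function names and example figures are hypothetical; the idea is simply that automation pays off when the manual time it removes exceeds the time spent building and maintaining it.

```python
# Hypothetical break-even check for automating a recurring manual task.
# All inputs are illustrative assumptions, not figures from Gartner.


def minutes_saved(runs_per_year: int, minutes_per_run: float, years: float) -> float:
    """Total manual minutes avoided over the period if the task is automated."""
    return runs_per_year * minutes_per_run * years


def worth_automating(runs_per_year: int, minutes_per_run: float,
                     years: float, setup_minutes: float,
                     upkeep_minutes_per_year: float = 0.0) -> bool:
    """True if time saved exceeds time spent building and maintaining the automation."""
    cost = setup_minutes + upkeep_minutes_per_year * years
    return minutes_saved(runs_per_year, minutes_per_run, years) > cost


# A weekly 30-minute report kept for two years, with 4 hours of setup and
# 1 hour of upkeep per year: 52 * 30 * 2 = 3120 min saved vs 240 + 120 = 360 min spent.
print(worth_automating(52, 30, 2, setup_minutes=240, upkeep_minutes_per_year=60))  # True
```

By the same arithmetic, a 15-minute task done four times a year saves only 60 minutes a year, so four hours of setup would take more than four years to pay back. That is the pitfall the image illustrates.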

Open-AudIT’s Scheduled Reporting

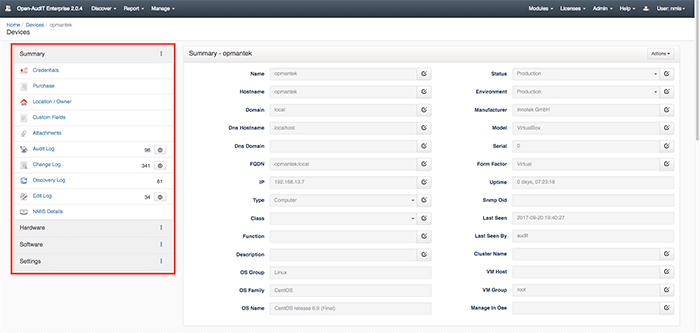

Open-AudIT gives you a lot of insight into your network, the devices attached to it and the software running on those devices. It collects a great deal of information; the difficult part is deciding which of it is valuable to your organisation. For example, a business may be interested in new devices that have connected to its network or software that has been installed recently. This information can be collected automatically at specific times, wrapped up in a nice bow and emailed to you.

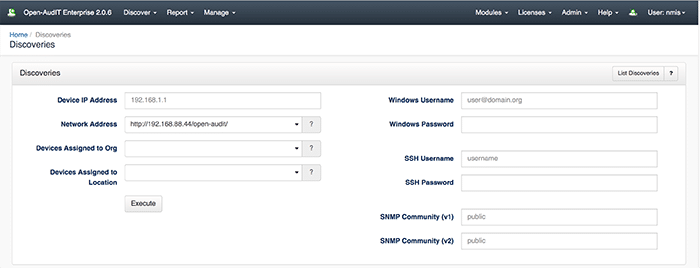

Once you have Open-AudIT installed and at least one device discovered, you can create a scheduled report on that information. Before creating the report, make sure the correct email details are in place. Navigate to ‘Admin’ in the menu bar at the top right, then ‘Configuration’ and finally ‘Email’ to load the email configuration screen. Check that all the details are correct and send a test email to yourself to confirm it is working.
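If the test email does not arrive, it can help to check the mail server settings independently of Open-AudIT. Here is a minimal sketch using Python's standard `smtplib` module. The host, port, account and addresses are placeholders, and it assumes the server accepts STARTTLS on port 587. It only confirms that the mail server accepts the credentials; it does not test Open-AudIT itself.

```python
import smtplib
from email.message import EmailMessage

# Placeholder settings: substitute the values from Open-AudIT's email configuration screen.
SMTP_HOST = "smtp.example.com"
SMTP_PORT = 587
SMTP_USER = "reports@example.com"
SMTP_PASSWORD = "change-me"


def build_test_message(sender: str, recipient: str) -> EmailMessage:
    """Build a plain-text test email."""
    msg = EmailMessage()
    msg["Subject"] = "SMTP test before scheduling reports"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content("If you can read this, the SMTP settings work.")
    return msg


def send_test_email(recipient: str) -> None:
    """Connect, upgrade to TLS, log in and send the test message."""
    msg = build_test_message(SMTP_USER, recipient)
    with smtplib.SMTP(SMTP_HOST, SMTP_PORT, timeout=10) as smtp:
        smtp.starttls()  # assumes the server supports STARTTLS on this port
        smtp.login(SMTP_USER, SMTP_PASSWORD)
        smtp.send_message(msg)
```

After filling in real values, calling `send_test_email("you@example.com")` should deliver the message; an exception such as `smtplib.SMTPAuthenticationError` points to the setting that needs fixing.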

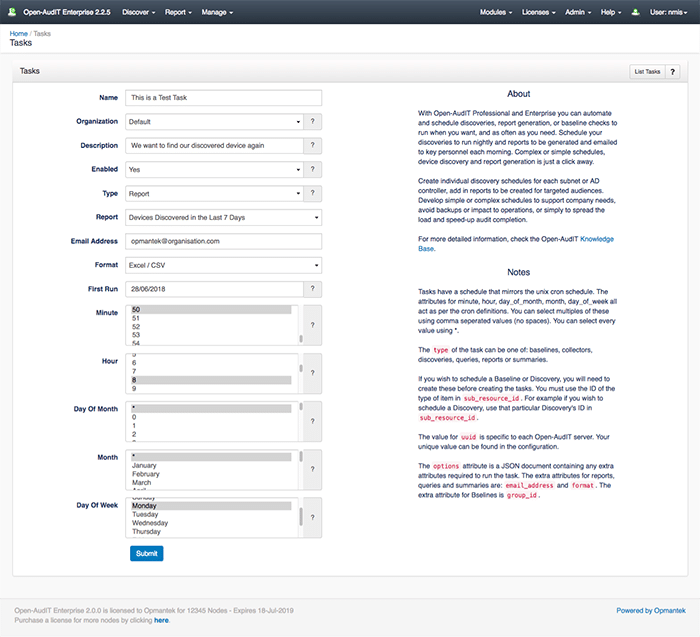

Now we get to the fun stuff: the part that makes your life easier while everyone thinks you are working double time. Open-AudIT calls its automation ‘Tasks’; the task list is under ‘Admin’, then ‘Tasks’, then ‘List Tasks’. From this screen you can set up the following types of task: Baseline, Discovery, Report, Query, Summary or Collector. In our previous post we ran a single-device discovery with some success; this time we will build on it by scheduling a report for Monday morning.

Click ‘Create’ at the top right to bring up all the scheduling options; essentially this is the same as the manual process, with a time element added. Enter a test name (you can always edit it later) and select Report as the type. This adds an extra menu item, from which we choose the report titled ‘Devices Discovered in the Last 7 Days’. See below for what it should look like. I have set this task to run every Monday at 8:50 am, which makes for perfect coffee-drinking reading material.
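The only new ingredient compared with a manual report is the time element. As a hedged illustration (not Open-AudIT's scheduler code), here is how an "every Monday at 8:50 am" rule resolves to a concrete run time in Python; the function name and defaults are hypothetical. In standard cron notation the same schedule is `50 8 * * 1`.

```python
from datetime import datetime, timedelta


def next_weekly_run(now: datetime, weekday: int = 0, hour: int = 8, minute: int = 50) -> datetime:
    """Return the next datetime strictly after `now` on `weekday` (Monday=0) at hour:minute."""
    days_ahead = (weekday - now.weekday()) % 7
    candidate = (now + timedelta(days=days_ahead)).replace(
        hour=hour, minute=minute, second=0, microsecond=0
    )
    if candidate <= now:
        candidate += timedelta(days=7)  # this week's slot has passed, so roll to next week
    return candidate


print(next_weekly_run(datetime(2024, 5, 31, 16, 0)))  # Friday afternoon -> 2024-06-03 08:50:00
```

Naive local datetimes keep the sketch short; a real scheduler also has to account for time zones and daylight-saving changes.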

If everything is configured correctly, come Monday you will have a CSV report waiting for you, with one entry for our discovered device. The same task scales to the size of your organisation, so automation will have completed a job for you before you even start your day. This demonstration covers just one of the features in Open-AudIT; there is much more available. Open-AudIT offers a 20-device trial license so you can test out the features. If you would like a larger trial license, don’t hesitate to contact us or request a demo; we can help you get more wins every day.